Control the end-to-end AI lifecycle

Run evaluations, monitor behavior, and fix issues instantly, without redeploys.

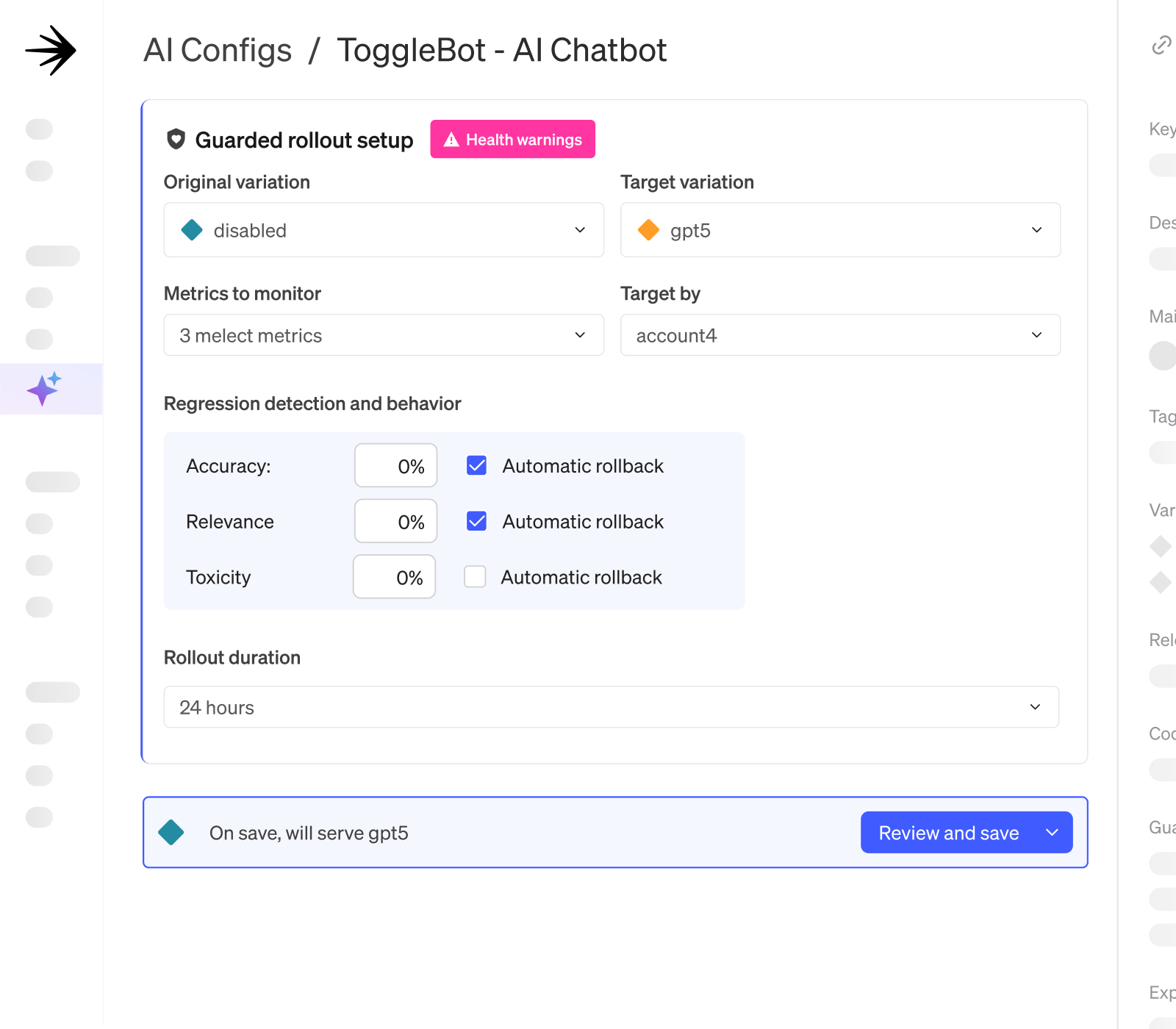

Validate changes safely.

Evaluate offline, then prove with holdouts, shadow traffic, and pass/fail rules before you scale.

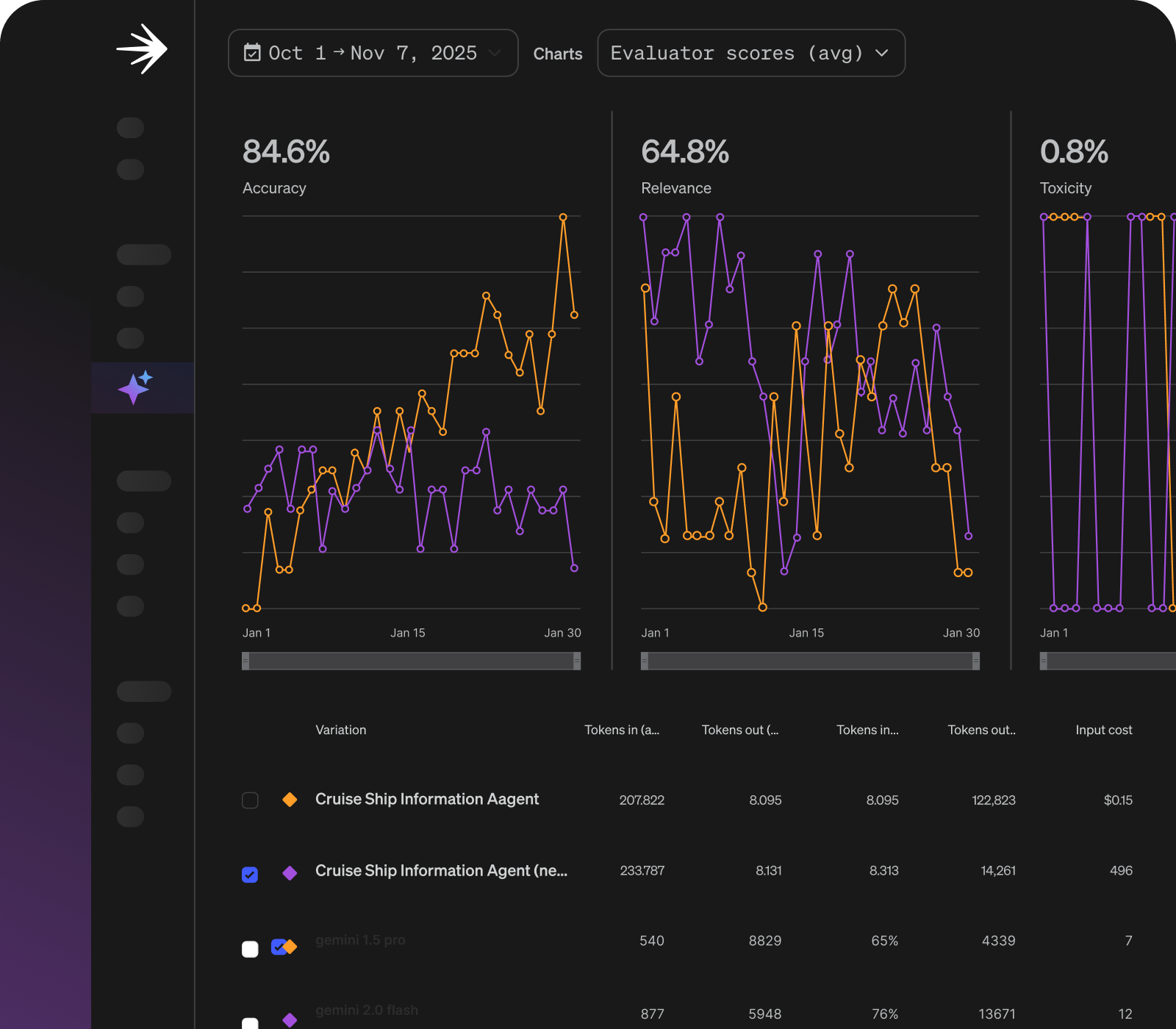

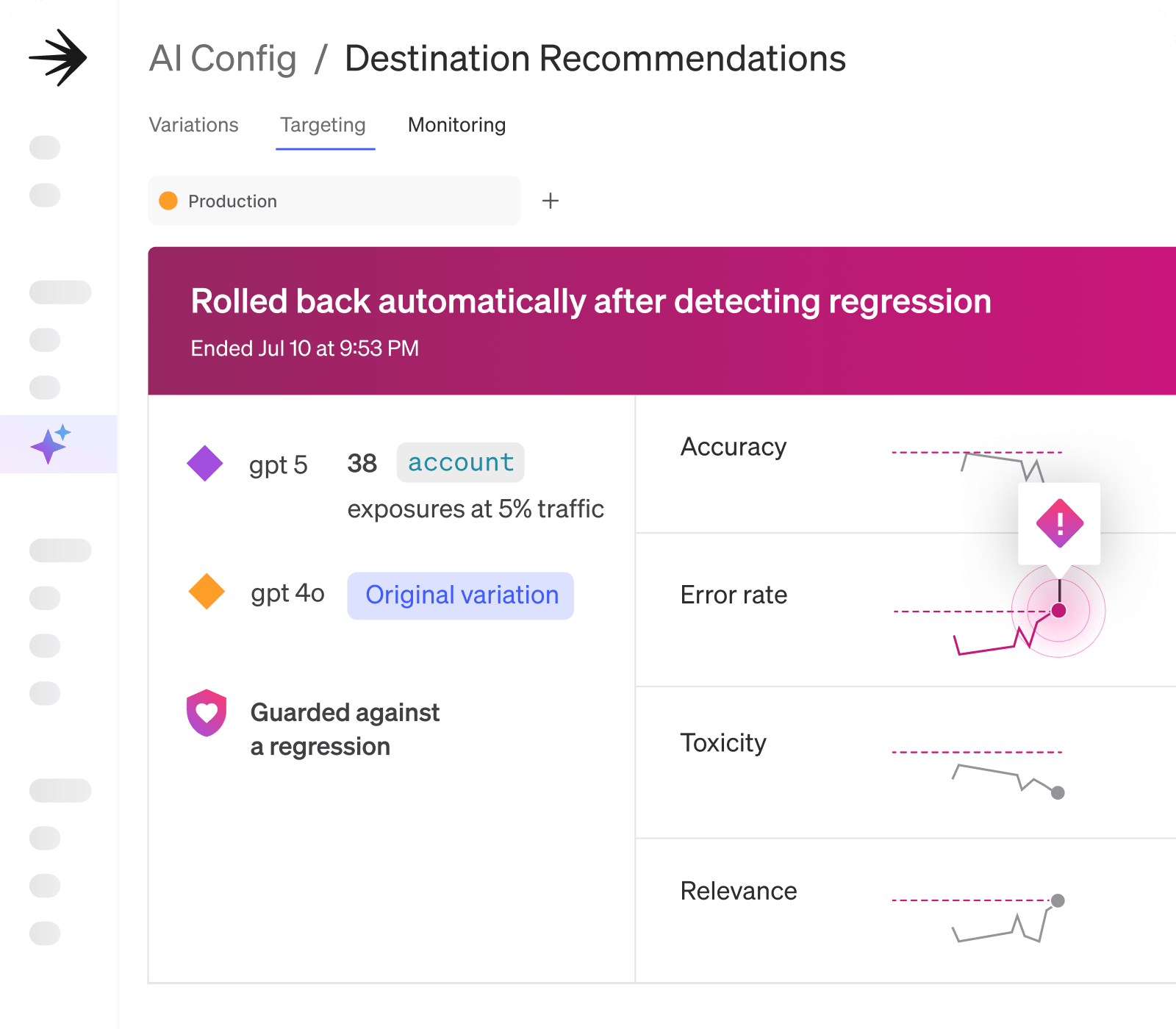

Spot regressions fast.

Detect quality or behavior changes with judge scores and traces, see exactly what changed, and get actionable alerts.

Fix without redeploys.

Restore the last known good variation or redirect traffic in seconds – no code push required.

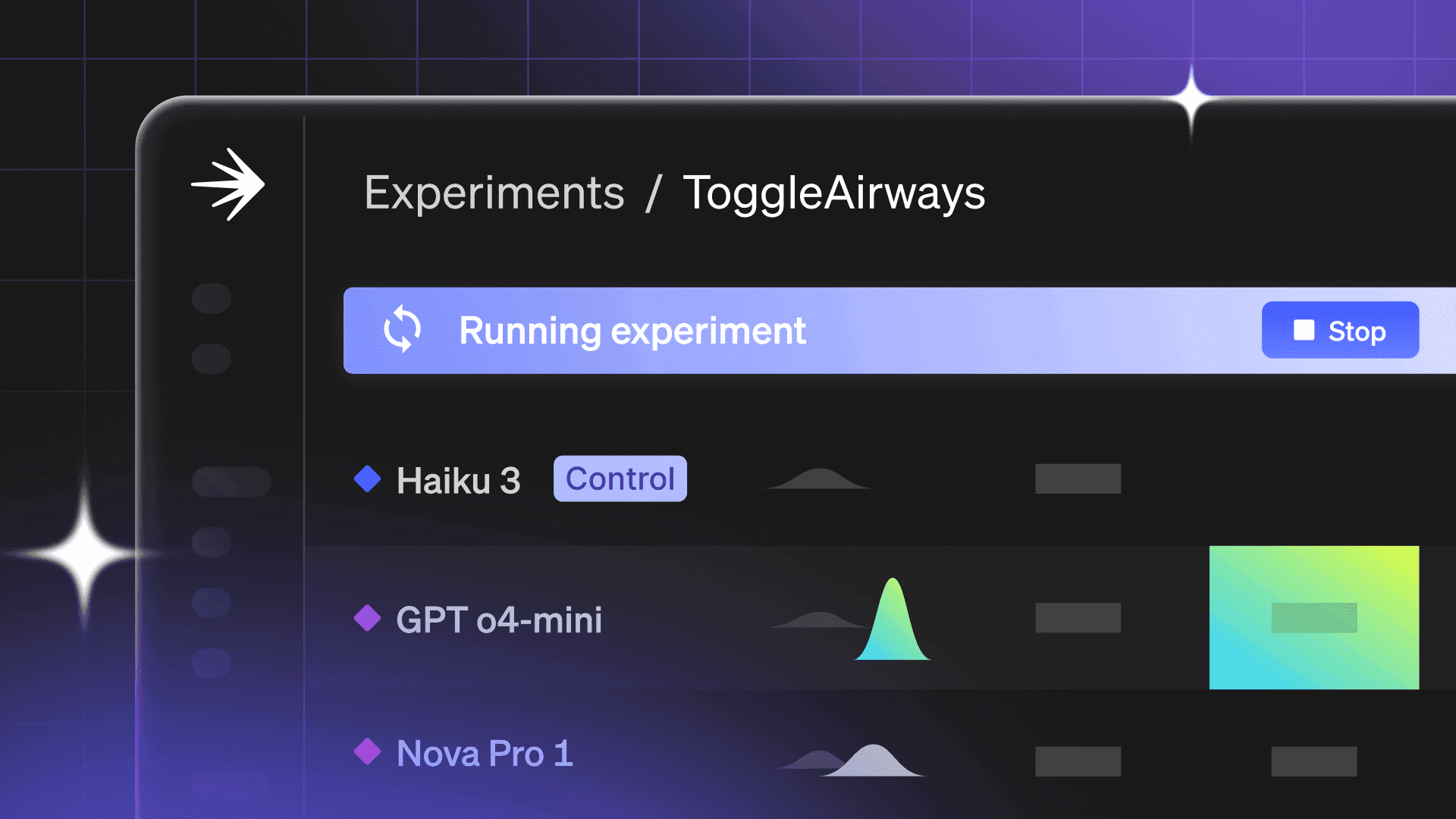

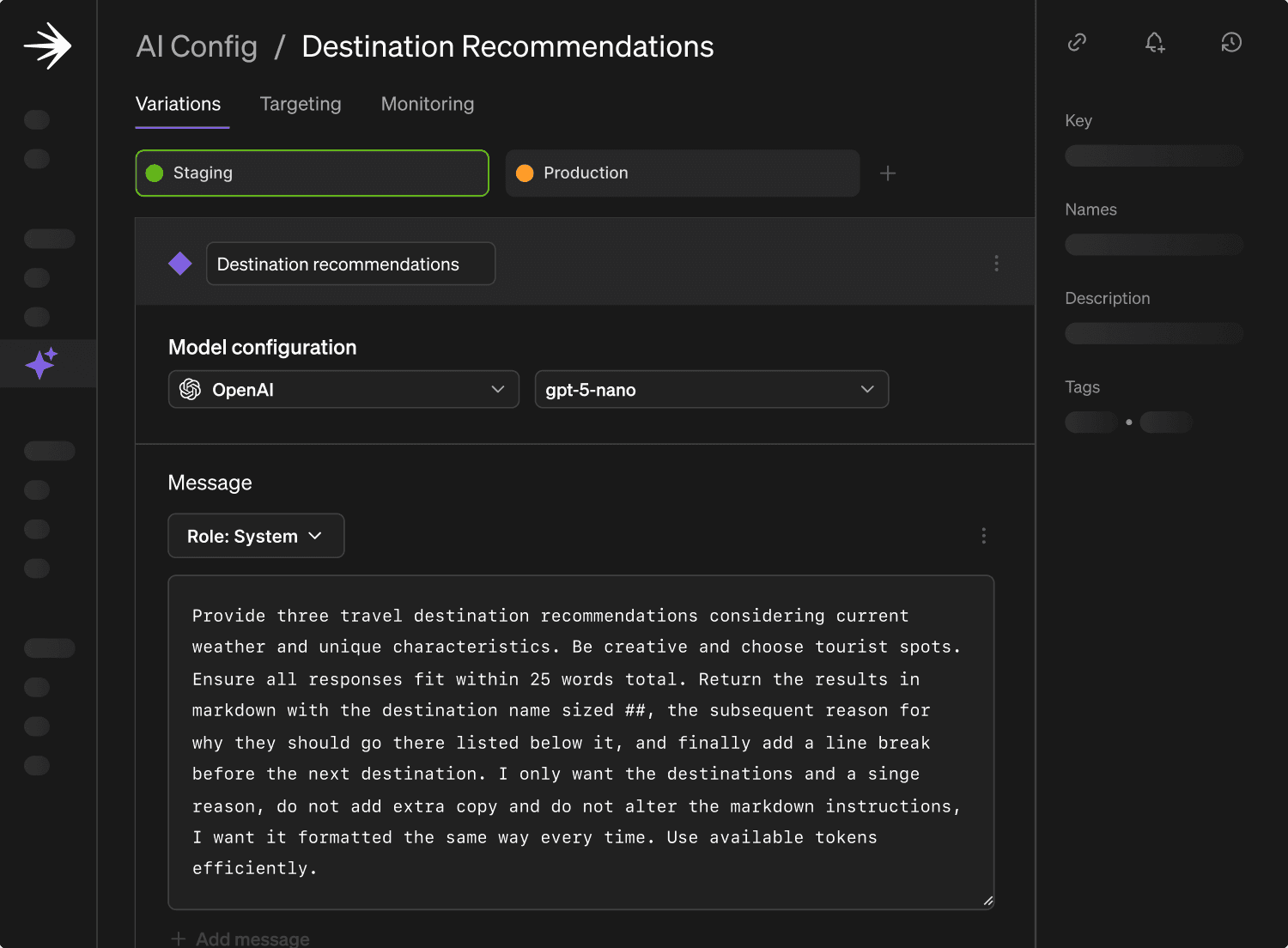

Validate changes safely

Evaluate offline, then prove with real traffic. Scale only what works.

Start in a sandbox.

Use offline evals to pre-vet changes before production checks.

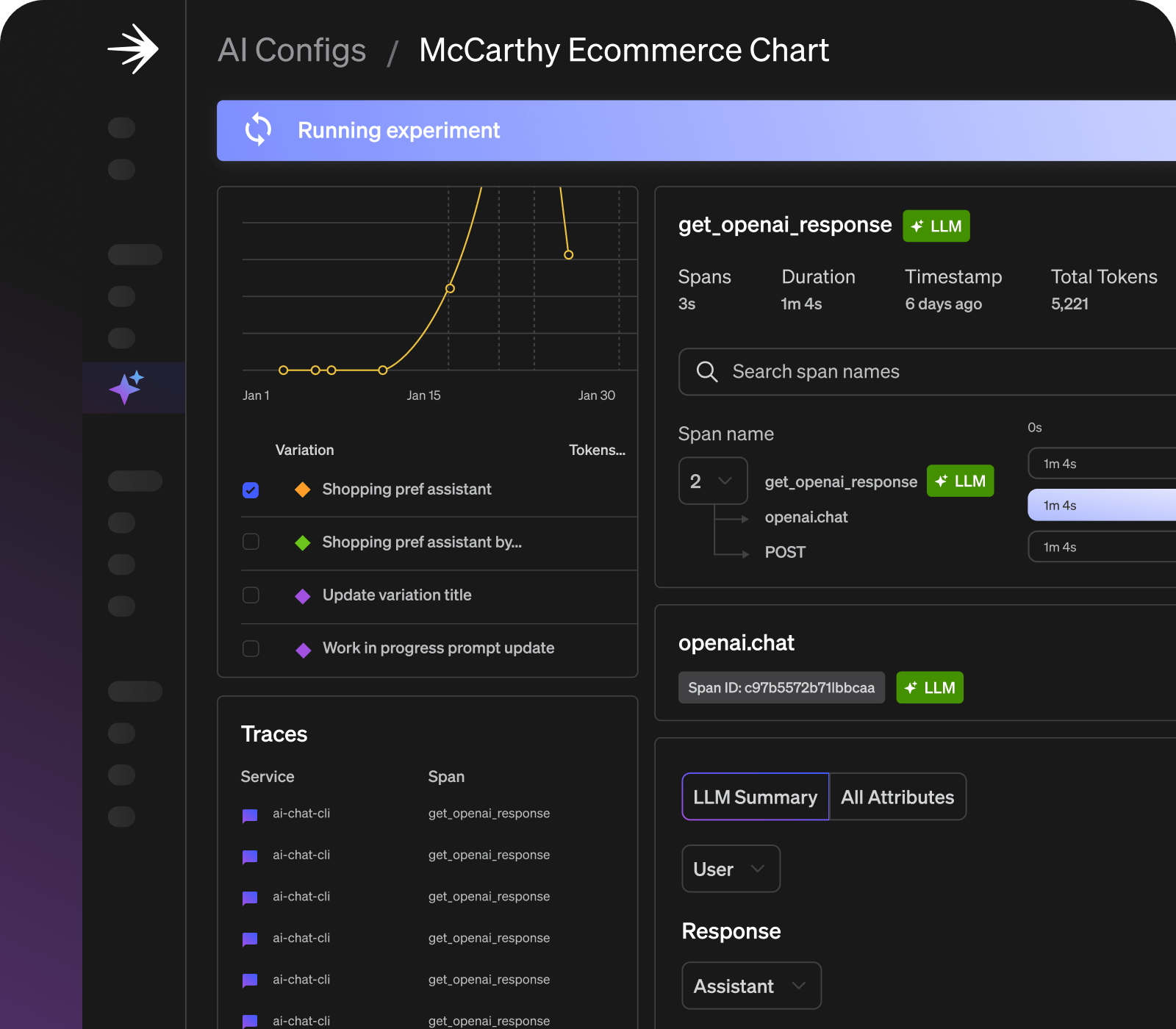

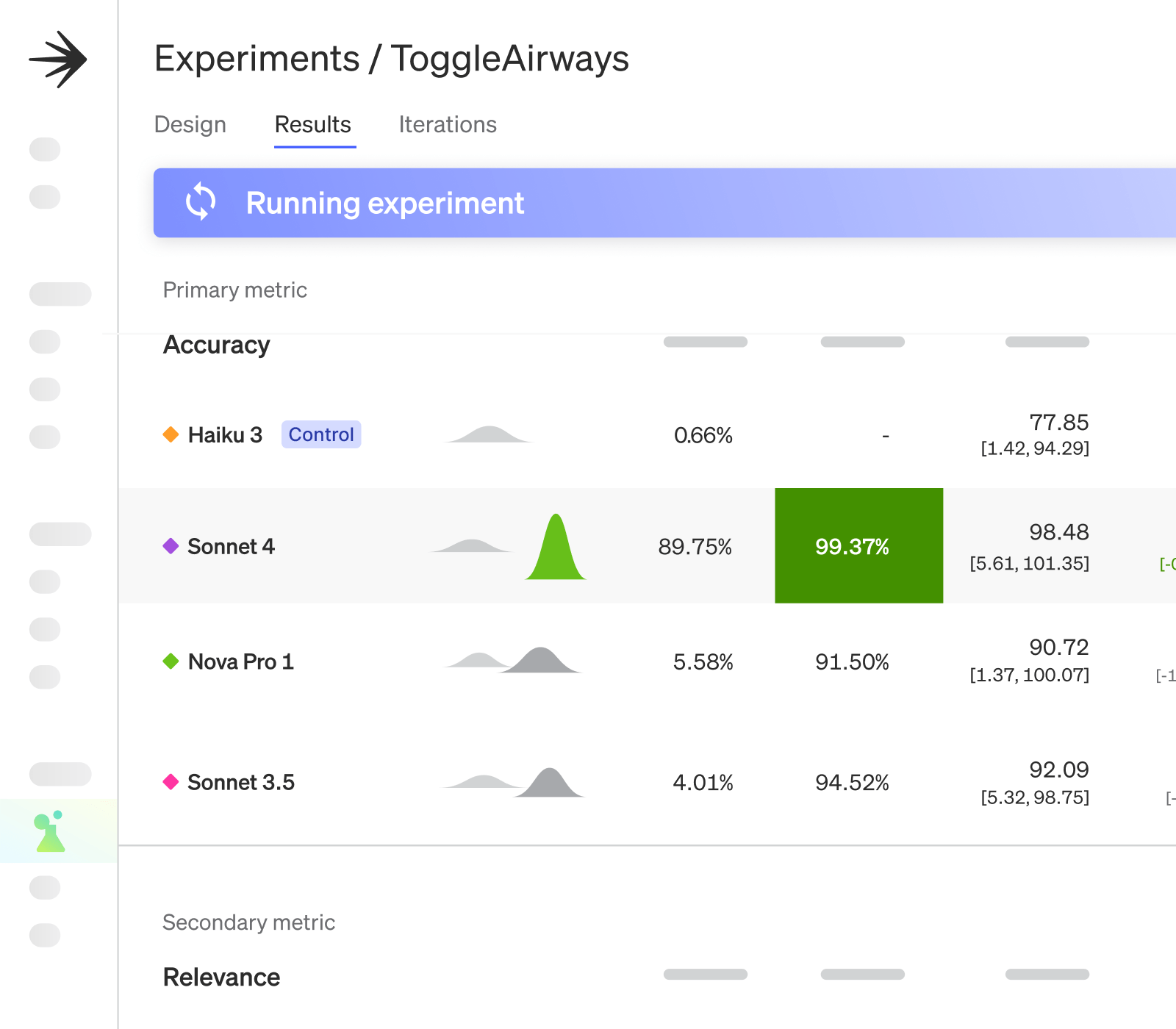

Compare with real traffic.

Run holdouts, shadow tests, or targeted rollouts with LLM-aware observability to validate under live conditions.
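As a rough illustration of how a holdout can be carved out of live traffic, the sketch below hashes a user key into a stable bucket so the same user always lands in the same group. This is a generic technique, not a description of how AI Configs implements it; the function names, the salt, and the 100-bucket split are all assumptions made for the example.

```python
import hashlib


def bucket(user_key: str, salt: str, buckets: int = 100) -> int:
    """Map a user key to a stable bucket in [0, buckets). Hypothetical helper."""
    digest = hashlib.sha256(f"{salt}:{user_key}".encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def assign_variation(user_key: str, holdout_percent: int,
                     salt: str = "holdout-v1") -> str:
    """Return 'holdout' for the reserved slice of traffic, else 'candidate'.

    holdout_percent is an integer from 0 to 100 (assumed convention).
    """
    if bucket(user_key, salt) < holdout_percent:
        return "holdout"
    return "candidate"
```

Because the assignment depends only on the key and the salt, a user sees a consistent variation across requests, and changing the salt reshuffles the groups for a new test.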

Promote with confidence.

Use quality, latency, and cost thresholds to decide when to scale up or roll back.
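A promotion rule of this kind can be sketched as a simple gate over the three signals. The metric names, the 0-to-1 judge score scale, and the threshold values below are hypothetical and chosen only for illustration; they are not taken from the product.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Metrics:
    judge_score: float            # mean judge score, assumed 0.0 to 1.0
    p95_latency_ms: float         # 95th percentile latency in milliseconds
    cost_per_1k_requests: float   # spend per 1,000 requests


@dataclass(frozen=True)
class Thresholds:
    min_judge_score: float
    max_p95_latency_ms: float
    max_cost_per_1k_requests: float


def decide(metrics: Metrics, limits: Thresholds) -> Tuple[str, List[str]]:
    """Return ('promote' or 'roll_back', list of failed checks)."""
    failures: List[str] = []
    if metrics.judge_score < limits.min_judge_score:
        failures.append("quality")
    if metrics.p95_latency_ms > limits.max_p95_latency_ms:
        failures.append("latency")
    if metrics.cost_per_1k_requests > limits.max_cost_per_1k_requests:
        failures.append("cost")
    return ("promote" if not failures else "roll_back", failures)
```

Returning the list of failed checks alongside the decision keeps the outcome explainable: a rollback can be traced to the specific threshold that was missed.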

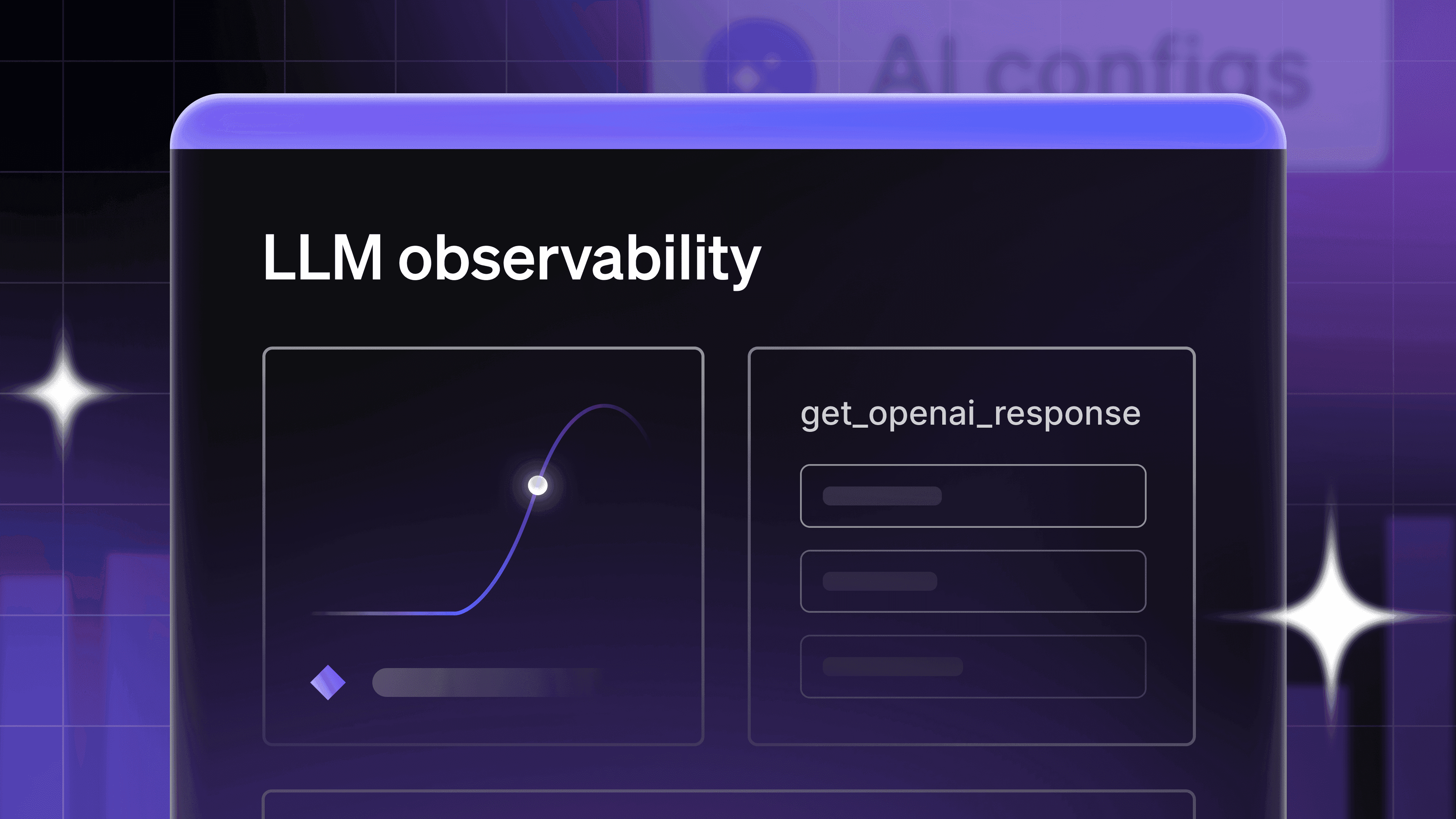

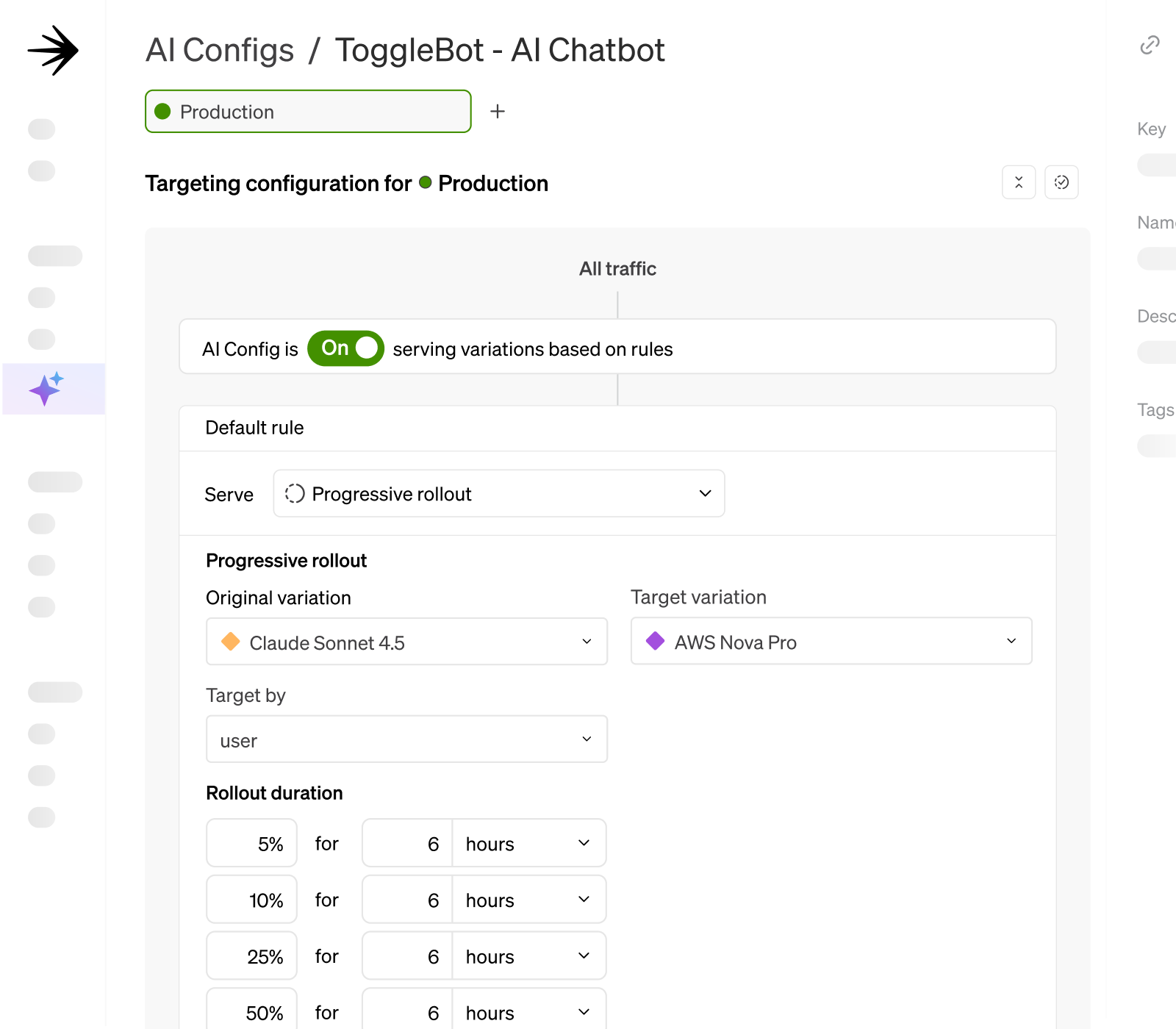

Spot regressions fast.

Track how quality shifts over time, trace what changed between versions, and know exactly when to take action.

Track the right metrics and thresholds.

Monitor judge scores, latency, token usage, and cost for each configuration or version.

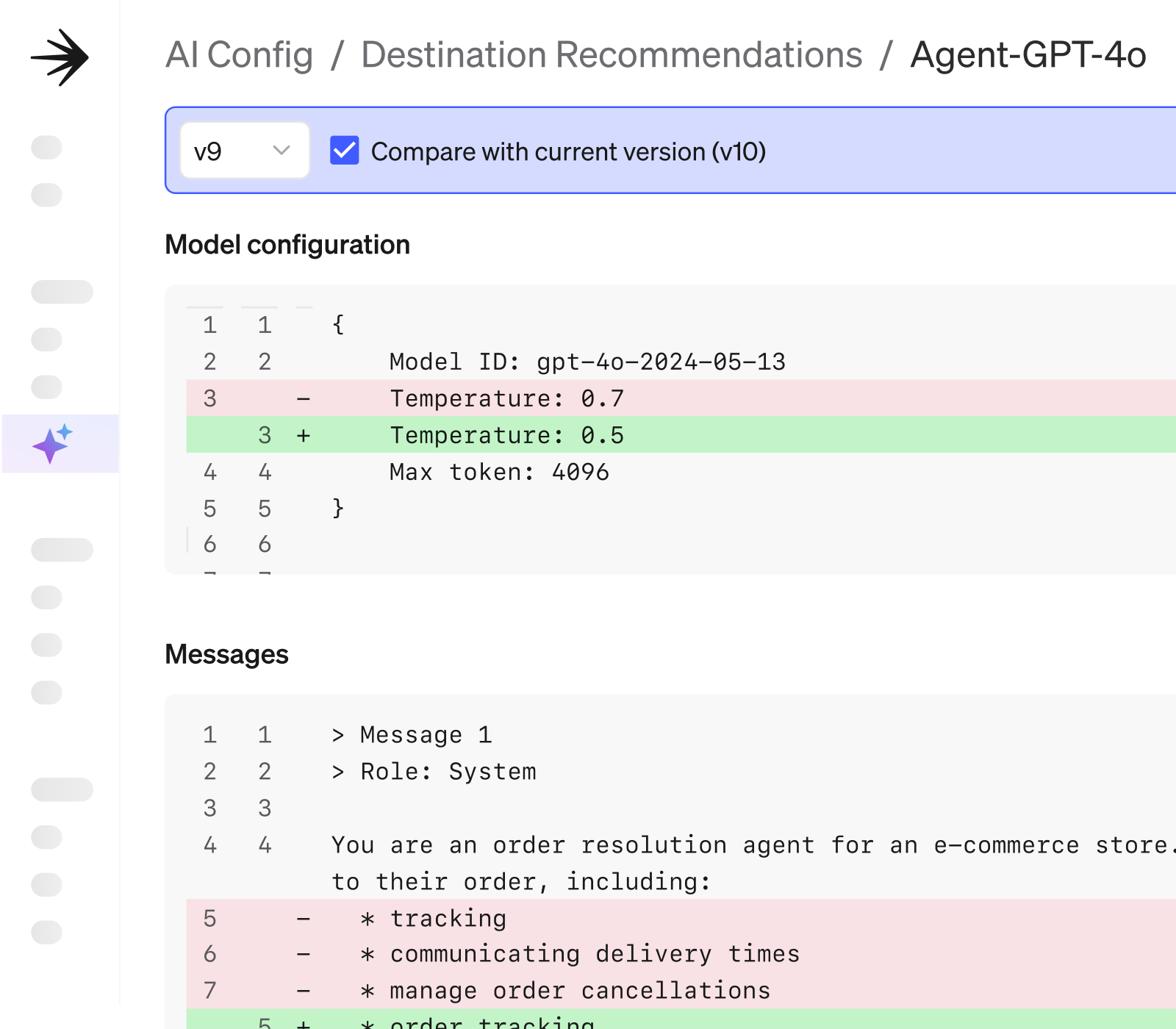

Pinpoint the cause.

Follow completions, tool calls, and prompt flows; compare versions to see which change triggered the shift.

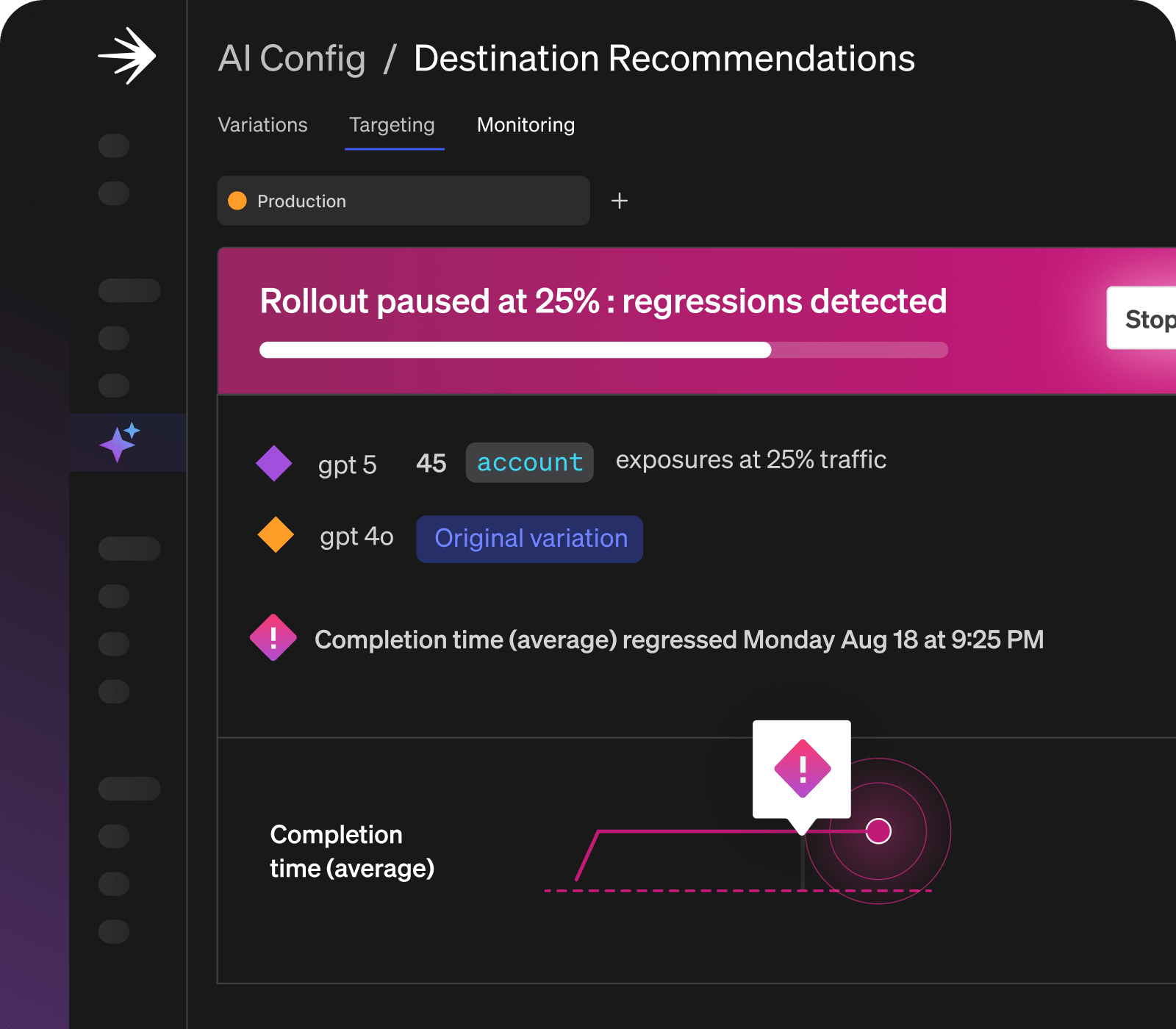

Alerts you can act on.

Get notified when a threshold is crossed, then choose to pause exposure, investigate further, or roll back instantly.

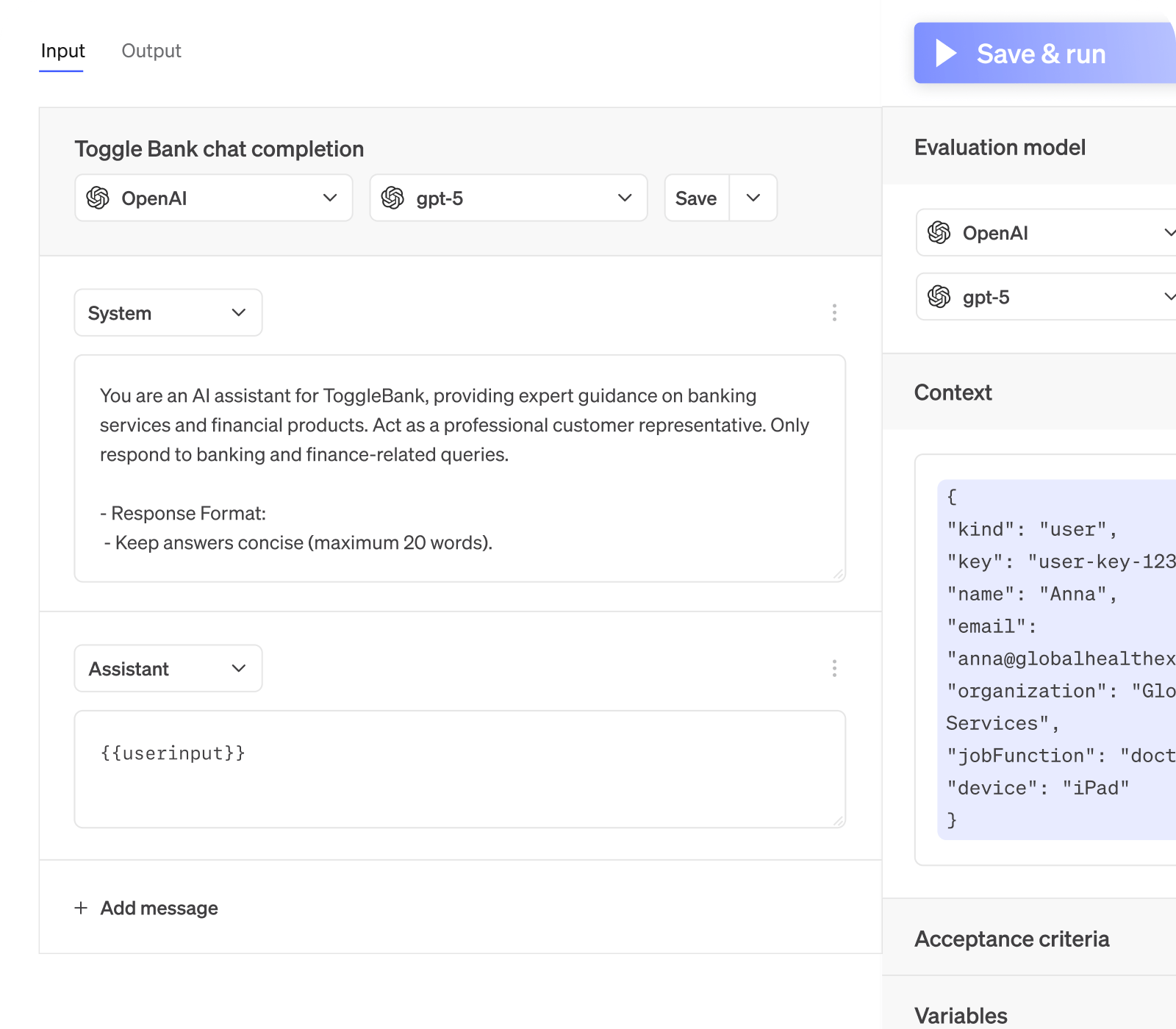

Fix it without redeploys.

Restore a healthy state or reroute traffic fast: no code push required.

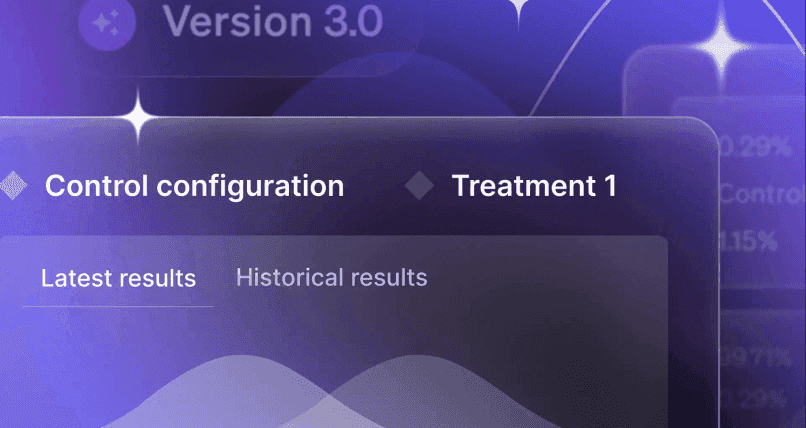

Restore from last known good.

Roll back immediately to the most recent healthy variation.
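Conceptually, "last known good" means walking the version history backwards until a healthy entry is found. The sketch below shows that idea with a hypothetical `Version` record and `healthy` flag; it is an illustration of the concept, not the product's data model or API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Version:
    version_id: str   # hypothetical identifier for a saved variation
    healthy: bool     # assumed flag set by monitoring checks


def last_known_good(history: List[Version]) -> Optional[Version]:
    """Return the most recent healthy version, or None if there is none.

    history is ordered oldest to newest.
    """
    for version in reversed(history):
        if version.healthy:
            return version
    return None
```

For a history of `v1` (healthy), `v2` (healthy), and `v3` (unhealthy), the function selects `v2` as the rollback target.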

Identify what works best.

Use targeting rules and guardrails to find the right model or configuration for quality, speed, and cost.

Edit safely at runtime.

Update prompts, parameters, or models with approvals and an audit trail.

Learn more about AI Configs

Evaluate, observe, and improve your AI lifecycle – without redeploys.