The next generation of software is already being built with generative AI, whether it’s integrated directly into applications or used as a tool to accelerate development. Builders can already address AI use cases with LaunchDarkly, and our users have told us about the pressure they’re under to change, test, and optimize models and configurations to ensure the best possible performance.

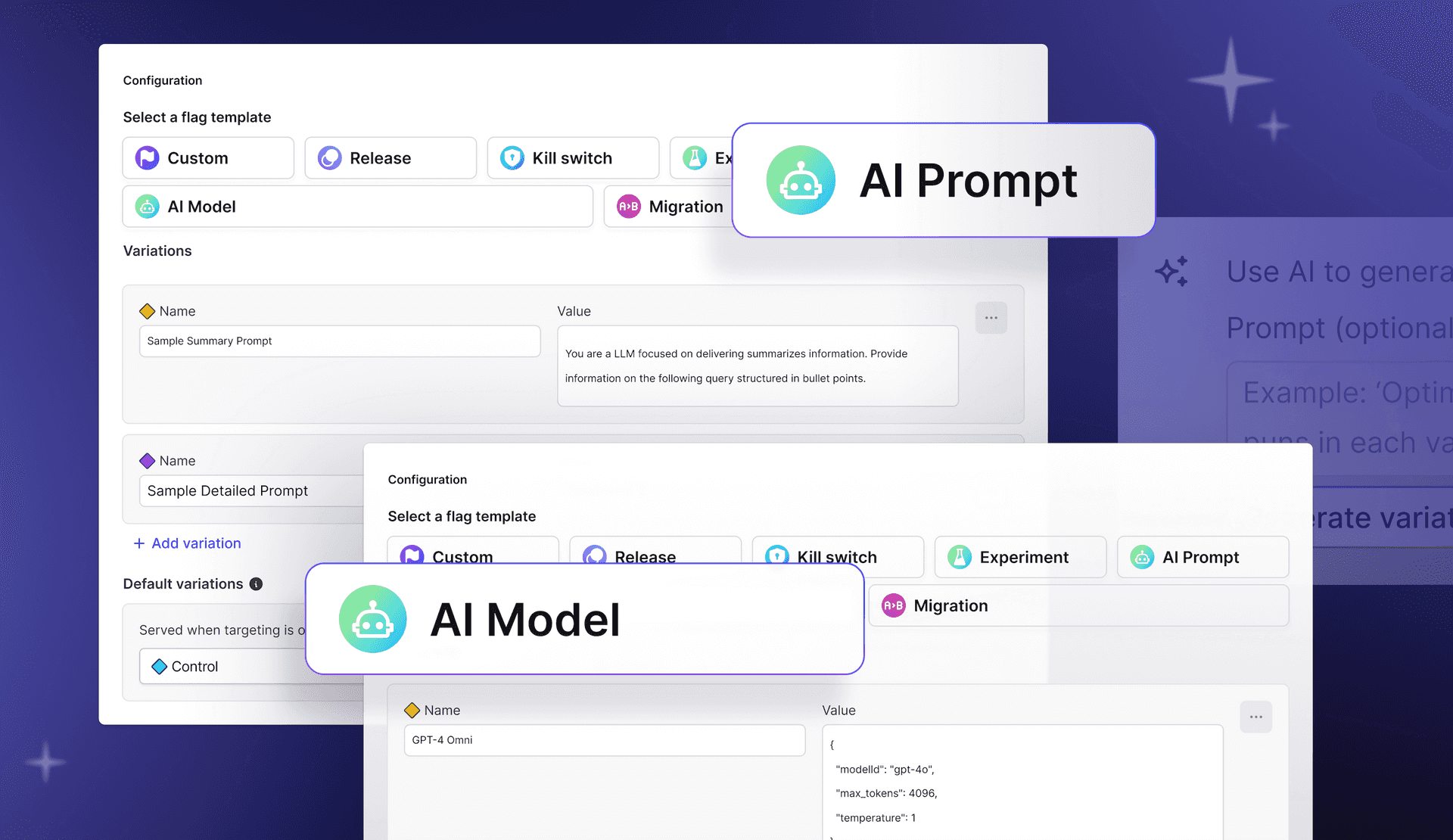

What if getting started with a new AI model were as easy as adding a variation to a flag and flipping it on? What if you could do the same for combinations of models and prompts? And what if finding out whether a new model or prompt actually performs better were as easy as creating an experiment and pointing it at the flag? To make it even easier to build AI products and features with LaunchDarkly, we’re excited to announce new AI model and AI prompt flag templates, now available in GA via our Feature Preview interface.

The new flag templates are designed to help you release, monitor, measure, and iterate on models and prompts to find the optimal combination for your use case. Here’s what you can do with the new flags.

AI model flags

You can use AI model flags to iterate on both models and model configurations, whether you are introducing models from new vendors or tuning the configuration of existing ones. For example, you might be using OpenAI’s GPT-4 Turbo model today but want to be able to switch to GPT-4 Omni and apply specific configurations that influence the accuracy and creativity of responses, or set a maximum number of generated tokens.

AI model flags are JSON flags that can be as simple or as complex as you want. You might control only the modelID field, which identifies the model in use, or you might also control one or more configuration parameters specific to the LLM. For example, many LLMs accept parameters such as temperature, max_tokens, top_p, or top_k, although each LLM provider uses different parameters.
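
As a rough illustration, one variation of an AI model flag might carry JSON like the value below. Only the modelID field name comes from the description above; the values, the `DEFAULTS` dictionary, and the `resolve_model_config` helper are assumptions made for this sketch, not a LaunchDarkly schema.

```python
import json

# Hypothetical JSON value of one flag variation (values are illustrative).
VARIATION_JSON = """
{
  "modelID": "gpt-4o",
  "temperature": 0.3,
  "max_tokens": 512
}
"""

# Assumed application-side defaults for parameters a variation leaves out.
DEFAULTS = {
    "modelID": "gpt-4-turbo",
    "temperature": 0.7,
    "top_p": 1.0,
    "max_tokens": 256,
}


def resolve_model_config(variation: dict) -> dict:
    """Overlay the flag variation on the defaults, ignoring unknown keys."""
    known = {key: value for key, value in variation.items() if key in DEFAULTS}
    return {**DEFAULTS, **known}


config = resolve_model_config(json.loads(VARIATION_JSON))
print(config)
```

Keeping defaults in the application means a variation can stay as small as a single modelID while the remaining parameters fall back to known-good values.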

Let’s say you have a customer-facing chat application whose server-side API configuration includes several AI models (Claude 3 Haiku, Claude 3 Sonnet, and Claude Instant 1.2). Your LaunchDarkly SDK evaluates the AI model flag and returns the configuration from the variation being served. You can then pass that configuration to your LLM as part of an API request. New configurations can be tuned directly within LaunchDarkly at runtime, without redeploying.
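
The flow described above can be sketched in a few lines. To keep the example runnable without an SDK key, `evaluate_model_flag` is a hypothetical stand-in for the SDK's flag evaluation; the model identifiers, the fallback values, and the shape of the request body are likewise assumptions rather than any provider's exact API.

```python
from typing import Any

# Assumed fallback used when the flag cannot be evaluated.
FALLBACK_CONFIG = {"modelID": "claude-3-haiku", "temperature": 0.5, "max_tokens": 256}


def evaluate_model_flag(user_key: str) -> dict[str, Any]:
    """Hypothetical stand-in for the SDK evaluation step.

    A real application would ask the LaunchDarkly SDK for the flag's JSON
    variation for this user and pass FALLBACK_CONFIG as the default value.
    """
    return {"modelID": "claude-3-sonnet", "temperature": 0.2, "max_tokens": 1024}


def build_llm_request(flag_value: dict[str, Any], user_message: str) -> dict[str, Any]:
    """Turn the evaluated flag value into a generic chat request body."""
    config = {**FALLBACK_CONFIG, **flag_value}
    return {
        "model": config["modelID"],
        "temperature": config["temperature"],
        "max_tokens": config["max_tokens"],
        "messages": [{"role": "user", "content": user_message}],
    }


request = build_llm_request(evaluate_model_flag("user-123"), "How do I reset my password?")
print(request["model"], request["max_tokens"])
```

Because the request is assembled from the flag value on every call, serving a different variation changes the model and its parameters without a code change.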

AI prompt flags

Similar to AI model flags, you can use AI prompt flags to iterate on prompt configurations and to experiment with different configurations to improve the results you get from your models. Prompts change often as we learn more about the most effective ways to interact with models.

AI prompt flags are JSON flags that come preconfigured with “System” and “User” contexts to help frame the optimal prompt configuration. These can be expanded as needed and passed into the prompt or messages field of your LLM configuration.
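
Here is a minimal sketch of how a prompt flag value could be turned into a messages list. The lowercase `system` and `user` keys, the `$question` placeholder, and the `to_messages` helper are assumptions for illustration; the actual template may structure its JSON differently.

```python
from string import Template

# Hypothetical AI prompt flag variation with "system" and "user" entries.
PROMPT_FLAG = {
    "system": "You are a helpful assistant that answers concisely.",
    "user": "Answer the following question: $question",
}


def to_messages(prompt_flag: dict, **values: str) -> list[dict]:
    """Fill placeholders and return chat messages in system, user order."""
    messages = []
    for role in ("system", "user"):
        if role in prompt_flag:
            # safe_substitute leaves unknown placeholders untouched instead of raising.
            content = Template(prompt_flag[role]).safe_substitute(values)
            messages.append({"role": role, "content": content})
    return messages


messages = to_messages(PROMPT_FLAG, question="What is a feature flag?")
for message in messages:
    print(message["role"], "->", message["content"])
```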

For example, let’s say you have an education application where users can ask an AI assistant to summarize a corpus of text from a textbook. You can create your “System” and “User” configurations, and then use release targeting rules to serve prompts designed for students’ different reading levels. When the LaunchDarkly SDK evaluates the AI prompt flag, it parses the returned flag variation and delivers the correct prompt to your LLM configuration. The AI model receives the correct prompt and end-user content, and your end users get a response that matches their reading level.
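
The reading-level scenario can be approximated locally as follows. In practice the targeting rule would live in LaunchDarkly and the SDK would return the matching variation; here `pick_variation` is a hypothetical stand-in for that rule, and the `readingLevel` attribute, the variation names, and the prompt text are all assumptions.

```python
from string import Template

# Hypothetical prompt variations, one per reading level.
VARIATIONS = {
    "elementary": {
        "system": "Summarize using short sentences and simple words.",
        "user": "Summarize this passage: $passage",
    },
    "high-school": {
        "system": "Summarize with precise vocabulary and key terms defined.",
        "user": "Summarize this passage: $passage",
    },
}
FALLBACK_LEVEL = "high-school"


def pick_variation(context: dict) -> dict:
    """Local stand-in for a targeting rule on the 'readingLevel' attribute."""
    level = context.get("readingLevel", FALLBACK_LEVEL)
    return VARIATIONS.get(level, VARIATIONS[FALLBACK_LEVEL])


def build_messages(context: dict, passage: str) -> list[dict]:
    """Combine the selected prompt with the end user's content."""
    prompt = pick_variation(context)
    user_content = Template(prompt["user"]).safe_substitute(passage=passage)
    return [
        {"role": "system", "content": prompt["system"]},
        {"role": "user", "content": user_content},
    ]


student = {"key": "student-42", "readingLevel": "elementary"}
messages = build_messages(student, "Photosynthesis converts light into chemical energy.")
print(messages[0]["content"])
```

Unknown or missing reading levels fall back to a single default variation, which mirrors how a flag serves its default rule when no targeting rule matches.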

Get access to the new AI flags

The new AI flag templates are in GA today for Starter, Pro, and Enterprise plans and are available within LaunchDarkly’s “Feature Preview” interface. (Note: The flags are not yet available in LaunchDarkly Federal, the FedRAMP-authorized instance of LaunchDarkly.) To get started, select your user icon, then select Feature Preview. From there, enable the new feature and create your new feature flags today! To learn more, read the documentation for AI model flags and AI prompt flags.

Beyond the new flag templates, LaunchDarkly already offers several ways to help manage releases and iteration for AI products and features. For example, you can experiment with different combinations of models, prompt configurations, and more to find the optimal product experience. You can customize entitlements and access with reusable audience segments built on custom contexts, or use your own data to manage your audiences through our CDP integrations. And you can use Release Guardian to detect errors in model and prompt behavior and recover in real time.