A primer for software engineering leaders on de-risking, controlling, and optimizing AI applications in production

The race to find profitable use cases for generative AI continues. In an Ernst & Young survey, 100% of financial services leaders said they are already using or planning to use generative AI (GenAI) at their organizations.

Financial institutions must be especially careful in how they deploy this powerful technology, owing to the sensitive data, precious assets, and regulations characteristic of their industry. If banks, brokerages, fintechs, wealth management firms, and insurance companies are to capitalize on GenAI, they need ways to control it in production.

This article details how to get that control. I’ll explain how developers at financial institutions who build AI applications on large language models (LLMs) like GPT, Claude, and Gemini can control, optimize, and mitigate risk in those applications.

1. Mitigate risk with feature flags

Rolling out GenAI features to production traffic without proper controls can expose you to compliance violations, downtime, or hallucinations. Feature flags offer a powerful solution to these risks.

Decouple deployments from releases

A key strategy for delivering AI features safely is to decouple the deployment of those features to production servers from the release to end users (i.e., a dark launch). Deploy first to ensure the AI doesn’t break production. Then release.

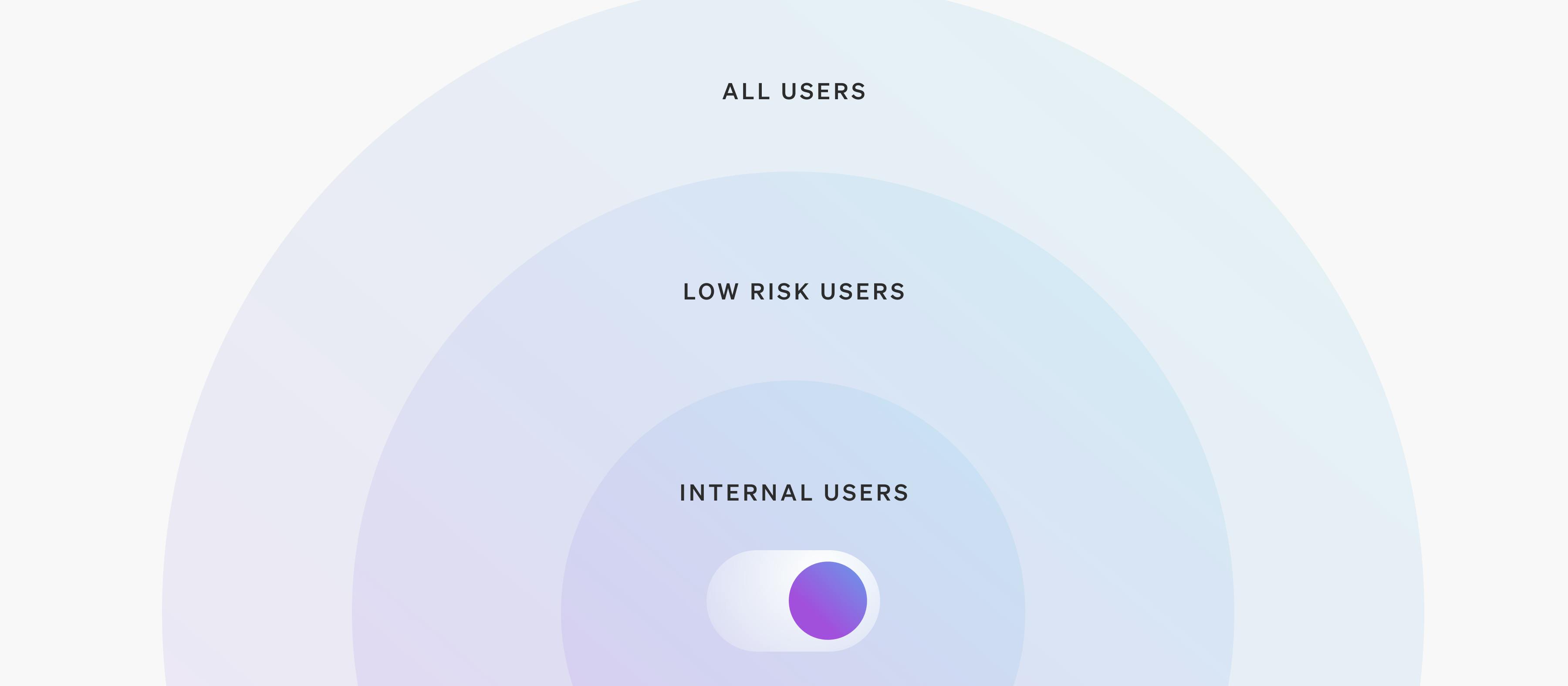

For example, a bank might deploy a chatbot powered by an LLM like GPT-4, but only enable the feature for internal users initially. This lets developers validate the AI’s performance in a more realistic environment. It also avoids exposing the chatbot to the public before it is proven reliable.
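To make the dark launch concrete, here is a minimal sketch in Python. It assumes a hypothetical in-memory flag store (`FLAGS`), a made-up flag key (`ai-chatbot`), and a placeholder `handle_message` function; it is not the LaunchDarkly SDK, only an illustration of gating a deployed feature to internal users.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    key: str
    is_internal: bool


# Hypothetical in-memory flag store. The chatbot code is deployed,
# but "internal_only" keeps it dark for customers.
FLAGS = {"ai-chatbot": {"enabled": True, "internal_only": True}}


def chatbot_enabled(user: User, flags: dict = FLAGS) -> bool:
    """Return True only if the flag is on and the user is in the allowed audience."""
    flag = flags.get("ai-chatbot", {})
    if not flag.get("enabled", False):
        return False  # a missing or disabled flag fails closed
    if flag.get("internal_only", True):
        return user.is_internal
    return True


def handle_message(user: User, message: str) -> str:
    if chatbot_enabled(user):
        # Placeholder for a real LLM call.
        return f"[AI chatbot] You asked: {message}"
    return "Please contact support for help."
```

Releasing to the public then becomes a data change (setting `internal_only` to `False`) rather than a new deployment.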

Progressively deliver for safe testing

Financial institutions can also progressively deliver AI features, using feature flags to control which users receive the new capabilities. Start by releasing to a limited set of users—such as internal teams, early access users, or customers in a specific region. This lets you observe how the model is performing in a controlled setting before a full-scale rollout.

For instance, a wealth management firm could roll out a GenAI-powered investment advice tool to its most tech-savvy customers first. This lets them gather feedback and make real-time adjustments to system prompts or model parameters based on actual usage data. And if something goes wrong, the issue would have only impacted a small group of users.
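One common way to implement a percentage rollout is to hash each user into a stable bucket. The sketch below assumes that approach; the function names and the flag key are hypothetical, and a flag service may bucket users differently.

```python
import hashlib


def bucket(flag_key: str, user_key: str) -> int:
    """Map a user to a stable bucket from 0 to 99 for a given flag."""
    digest = hashlib.sha256(f"{flag_key}:{user_key}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % 100


def in_rollout(flag_key: str, user_key: str, percentage: int) -> bool:
    """True if the user falls inside the first `percentage` buckets."""
    return bucket(flag_key, user_key) < percentage
```

Because the bucket depends only on the flag key and the user key, a user who sees the feature at 10% keeps seeing it as the rollout widens to 50% and then 100%.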

Instantly disable faulty AI features with a kill switch

At times, GenAI systems can spit out hallucinations or degrade system performance. Using feature flags, you can flip a kill switch to disable problematic AI features.

For example, if an AI-powered fraud detection system begins misclassifying legitimate transactions as fraudulent or slowing down transaction processing, engineers can immediately toggle off the feature at runtime. By disabling the feature without triggering a CI/CD rollback, the team contains the issue right away and protects the customer experience.
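A kill switch is most useful when the code has a safe fallback path. The following sketch assumes a hypothetical runtime flag (`ai-fraud-detection`), a stand-in AI model passed in as a callable, and a made-up rules-based fallback; none of these names come from a real SDK.

```python
from typing import Callable


def rules_based_score(amount: float) -> float:
    """Simple non-AI fallback: flag only very large transactions (made-up threshold)."""
    return 0.9 if amount > 10_000 else 0.1


def score_transaction(
    amount: float,
    ai_enabled: Callable[[], bool],
    ai_model: Callable[[float], float],
) -> float:
    """Use the AI model only while the kill switch is on; otherwise fall back."""
    if ai_enabled():
        try:
            return ai_model(amount)
        except Exception:
            # A failing model should not block transaction processing.
            pass
    return rules_based_score(amount)


# Hypothetical runtime flag state; flipping it needs no redeploy.
flag_state = {"ai-fraud-detection": True}


def is_on() -> bool:
    return flag_state.get("ai-fraud-detection", False)
```

Setting `flag_state["ai-fraud-detection"] = False` sends every subsequent call to `rules_based_score`, so transaction processing continues while the team investigates the model.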

2. Rapidly iterate on models and prompts with AI-specific feature flags

Building and delivering excellent generative AI features requires constant tuning, customization, and adoption of new models. By using the LaunchDarkly AI model feature flags and AI prompt flags, financial services organizations can quickly iterate on AI features in real time, without the need for redeployments.

Seamlessly swap out models and tune parameters

New models and configurations are released frequently. Developers must adopt the latest versions to avoid the flaws of outdated models. With AI model flags, your team can rapidly switch between different LLMs (e.g., GPT vs. Claude) and adjust tunable parameters like model temperature and maximum token count. This agility helps you stay competitive and optimize for cost, accuracy, and performance.
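As a rough illustration, a model flag can carry a small configuration payload that the application validates before use. In this sketch the payload shape, the `ModelConfig` fields, the provider and model names, and the validation bounds are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelConfig:
    provider: str
    model: str
    temperature: float
    max_tokens: int


# Safe default used when the flag payload is missing or invalid (placeholder names).
DEFAULT_CONFIG = ModelConfig("provider-a", "model-small", 0.2, 512)


def resolve_model_config(flag_value: Optional[dict]) -> ModelConfig:
    """Turn a flag payload into a validated ModelConfig, or fall back to the default."""
    if not flag_value:
        return DEFAULT_CONFIG
    try:
        config = ModelConfig(
            provider=str(flag_value["provider"]),
            model=str(flag_value["model"]),
            temperature=float(flag_value["temperature"]),
            max_tokens=int(flag_value["max_tokens"]),
        )
    except (KeyError, TypeError, ValueError):
        return DEFAULT_CONFIG
    # Illustrative bounds; actual valid ranges depend on the provider.
    if not 0.0 <= config.temperature <= 2.0 or config.max_tokens <= 0:
        return DEFAULT_CONFIG
    return config
```

Validating the payload and falling back to a known-good default means a mistyped flag value degrades to the previous behavior instead of breaking requests.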

Dynamically engineer system prompts

System prompts, which instruct the AI on how to behave, need continuous refinement. AI prompt flags allow developers to update prompts dynamically in the production environment. For example, if a bank's GenAI chatbot is failing to adhere to compliance guidelines, developers can adjust the system prompt immediately.

Being able to seamlessly update system prompts in production without requiring customers to refresh their app creates a better experience.
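One way to get this behavior is to look up the system prompt on every request instead of baking it into the build. The sketch below assumes a hypothetical `PROMPT_STORE` dictionary standing in for a flag service, a made-up key name, and a generic chat message format.

```python
# Conservative prompt used if the store has no entry (illustrative wording).
FALLBACK_PROMPT = "You are a banking assistant. Do not give investment advice."

# Hypothetical stand-in for a flag service that serves the current prompt.
PROMPT_STORE = {"chatbot-system-prompt": "You are a helpful banking assistant."}


def build_messages(user_message: str, store: dict = PROMPT_STORE) -> list:
    """Read the system prompt at request time so updates apply to the next message."""
    system_prompt = store.get("chatbot-system-prompt", FALLBACK_PROMPT)
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_message},
    ]
```

Because the prompt is read per request, changing the stored value affects the next message a customer sends, with no redeploy and no app refresh.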

Here’s another use case to consider: an insurance company using AI for claims processing could rapidly switch to a newer, more accurate model that better detects fraudulent claims. Similarly, a banking chatbot's prompts could be rapidly updated to reflect new product offerings or regulatory requirements without service interruption.

3. Optimize AI performance through experimentation

To help ensure that GenAI applications deliver maximum value at a reasonable cost, financial services firms should experiment with a variety of models, prompts, and configurations.

A/B test to increase conversions

For customer-facing applications like AI financial advisors, running A/B tests allows you to compare different versions of the model and its configurations. For example, one model may return more concise answers and use fewer tokens, while another may offer longer, more personalized advice that drives higher conversions.

Through experimentation, engineering teams can refine the AI’s behavior while balancing utility with cost. (You can run these kinds of experiments in LaunchDarkly.)
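The trade-off between utility and cost can be tracked with two numbers per variant: conversion rate and tokens spent per conversion. This sketch uses a hypothetical `VariantStats` class and made-up session data; a real experiment would also need enough traffic and a significance test before drawing conclusions.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    sessions: int = 0
    conversions: int = 0
    tokens: int = 0

    def record(self, converted: bool, tokens_used: int) -> None:
        """Add one session's outcome to the running totals."""
        self.sessions += 1
        self.conversions += int(converted)
        self.tokens += tokens_used

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.sessions if self.sessions else 0.0

    @property
    def tokens_per_conversion(self) -> float:
        return self.tokens / self.conversions if self.conversions else float("inf")


# Made-up data: a concise variant and a longer, more detailed variant.
concise = VariantStats()
detailed = VariantStats()
for converted in (True, False, False, False):
    concise.record(converted, tokens_used=100)
for converted in (True, True, False, False):
    detailed.record(converted, tokens_used=300)
```

In this toy data the detailed variant converts at 50% versus 25%, but spends 600 tokens per conversion versus 400, which is exactly the kind of trade-off the experiment is meant to surface.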

Run experiments to help improve fraud detection and risk management

For AI-powered fraud detection systems, performance and accuracy are critical. Experimenting with different model configurations can help determine which setups minimize false positives while maintaining low-latency transaction processing. Through continuous experimentation, financial services firms can improve the system’s ability to accurately detect fraud in real time.
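As a sketch of how such a comparison might be scored, the code below computes a false positive rate from labeled outcomes and picks the configuration with the lowest rate that still fits a latency budget. The function names, the result dictionary shape, and the `p95_latency_ms` field are assumptions for illustration.

```python
from typing import Optional, Sequence


def false_positive_rate(predicted_fraud: Sequence[bool], is_fraud: Sequence[bool]) -> float:
    """Share of legitimate transactions that were wrongly flagged as fraud."""
    pairs = list(zip(predicted_fraud, is_fraud))
    false_positives = sum(1 for pred, actual in pairs if pred and not actual)
    true_negatives = sum(1 for pred, actual in pairs if not pred and not actual)
    legitimate = false_positives + true_negatives
    return false_positives / legitimate if legitimate else 0.0


def pick_config(results: dict, latency_budget_ms: float) -> Optional[str]:
    """Among configs within the latency budget, return the one with the lowest FPR."""
    eligible = {
        name: r for name, r in results.items()
        if r["p95_latency_ms"] <= latency_budget_ms
    }
    if not eligible:
        return None
    return min(eligible, key=lambda name: eligible[name]["fpr"])
```

A false positive rate alone is not enough to choose a fraud model; in practice you would track how much real fraud each configuration catches alongside it.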

Consider another use case: a fintech company could run experiments on its AI credit scoring model, comparing traditional methods with new AI approaches. By measuring approval rates, default risks, and regulatory compliance, they can make data-driven decisions on which model to adopt.

4. Personalize GenAI features to improve customer experiences

With the LaunchDarkly targeting engine, you can customize AI applications to different user groups based on any attribute you can imagine: device type, mobile app version, location, customer tier, financial profile, and so on.

Customize AI experiences by user segment

For example, a fintech company might tailor its GenAI trading assistant based on the user’s investment preferences or subscription tier. Premium users could receive advanced, personalized financial advice, while other users might get more basic recommendations. Such personalization increases customer satisfaction and retention.

Selectively disable AI features for cost and performance optimization

Financial services teams can also selectively disable AI features for certain users to manage costs and improve system performance. For example, if an AI feature causes excessive latency on older devices, you can turn off the feature for those users while keeping it live for others. This avoids disrupting the overall user experience while optimizing system performance and cost-efficiency.
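Both the segment-based tailoring and the selective disabling described in this section can be expressed as ordered targeting rules, where the first matching rule wins. The rule format, attribute names, and variation names below are hypothetical and only sketch the idea; they are not the LaunchDarkly rule syntax.

```python
# Supported comparison operators for this sketch.
OPS = {
    "eq": lambda actual, expected: actual == expected,
    "gte": lambda actual, expected: actual >= expected,
}

# Ordered rules: the device rule comes first so it overrides the tier rule.
RULES = [
    {"attribute": "device_age_years", "op": "gte", "value": 5, "variation": "ai-off"},
    {"attribute": "tier", "op": "eq", "value": "premium", "variation": "advanced-advice"},
]


def evaluate(context: dict, rules: list, default: str) -> str:
    """Return the variation of the first rule that matches the user context."""
    for rule in rules:
        actual = context.get(rule["attribute"])
        if actual is None:
            continue  # a missing attribute never matches
        if OPS[rule["op"]](actual, rule["value"]):
            return rule["variation"]
    return default
```

Rule order encodes priority: here a premium user on an old device gets `ai-off`, because protecting performance is checked before tailoring the advice.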

Use targeting to help maintain regulatory compliance

Engineers can also use targeting to adjust AI behavior to comply with varying regulations across different jurisdictions or customer types. For example, an AI lending platform could be configured to follow stricter lending guidelines for specific states or regions. Or it could provide transparency in line with local consumer protection laws. By dynamically modifying AI behavior to fit regulatory environments, financial institutions can better navigate compliance complexities.
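A jurisdiction-aware setup might map each region to a policy and fall back to the strictest settings when the region is unknown. Everything in this sketch is illustrative: the region codes, the `LendingPolicy` fields, and the numeric limits are placeholders, not actual regulatory thresholds.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class LendingPolicy:
    max_debt_to_income: float
    show_decision_explanation: bool


# Placeholder regions and values for illustration only.
POLICIES = {
    "REGION_A": LendingPolicy(max_debt_to_income=0.36, show_decision_explanation=True),
    "REGION_B": LendingPolicy(max_debt_to_income=0.43, show_decision_explanation=False),
}


def strictest(policies: Iterable[LendingPolicy]) -> LendingPolicy:
    """Combine policies by taking the most conservative value of each field."""
    items = list(policies)
    return LendingPolicy(
        max_debt_to_income=min(p.max_debt_to_income for p in items),
        show_decision_explanation=any(p.show_decision_explanation for p in items),
    )


def policy_for(region: str, policies: dict = POLICIES) -> LendingPolicy:
    """Return the region's policy, or the strictest combination if the region is unknown."""
    return policies.get(region) or strictest(policies.values())
```

Defaulting to the strictest policy is a design choice: an unrecognized or missing region then errs toward tighter limits and more transparency rather than the loosest behavior.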

Drive AI innovation with LaunchDarkly

As financial services firms integrate generative AI into their applications, the need for control, flexibility, and safety increases. Through feature management and experimentation, software engineering teams can decouple AI deployments from releases, progressively deliver AI features, and use kill switches to disable problematic functionality.

What’s more, teams can rapidly iterate on AI models and prompts. They can optimize model performance through experimentation, thus driving innovation without compromising on performance or accuracy. And with targeting and personalization, financial institutions can fine-tune AI behavior for different user segments, enhance customer experiences, and navigate complex regulatory environments.

By incorporating these strategies, engineering leaders in financial services can unlock more potential from generative AI.