LLM observability

Overview

This topic explains how to instrument your application with OpenLLMetry and the LaunchDarkly SDK. It also explains how to use LaunchDarkly observability features to view and analyze large language model (LLM) spans.

LLM observability provides visibility into how your LLMs behave in production. Each model request generates spans that capture the prompt, response, latency, token usage, and related metadata to help you understand how the model performed. With this information, you can investigate model performance, identify latency or error patterns, and understand how model versions or configurations can affect output quality and operational cost.

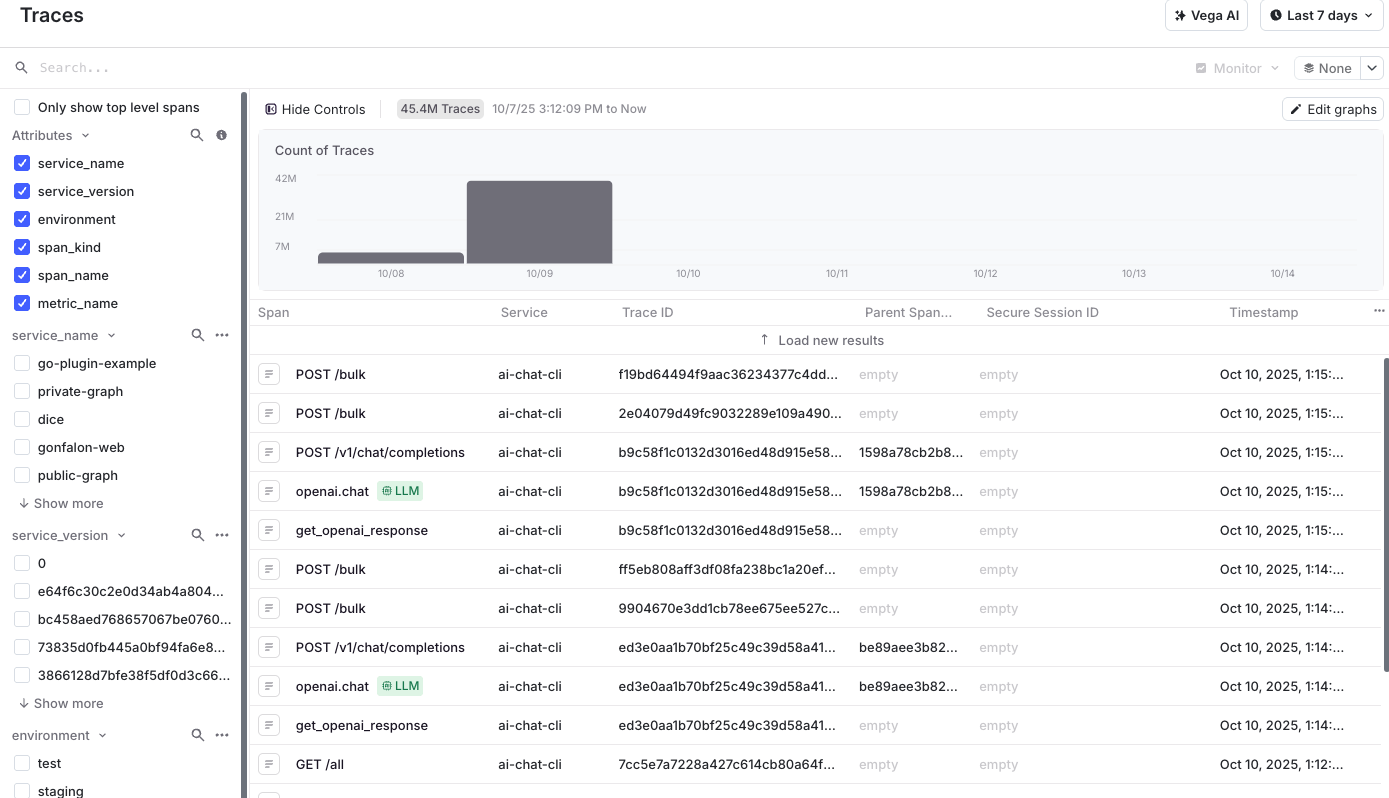

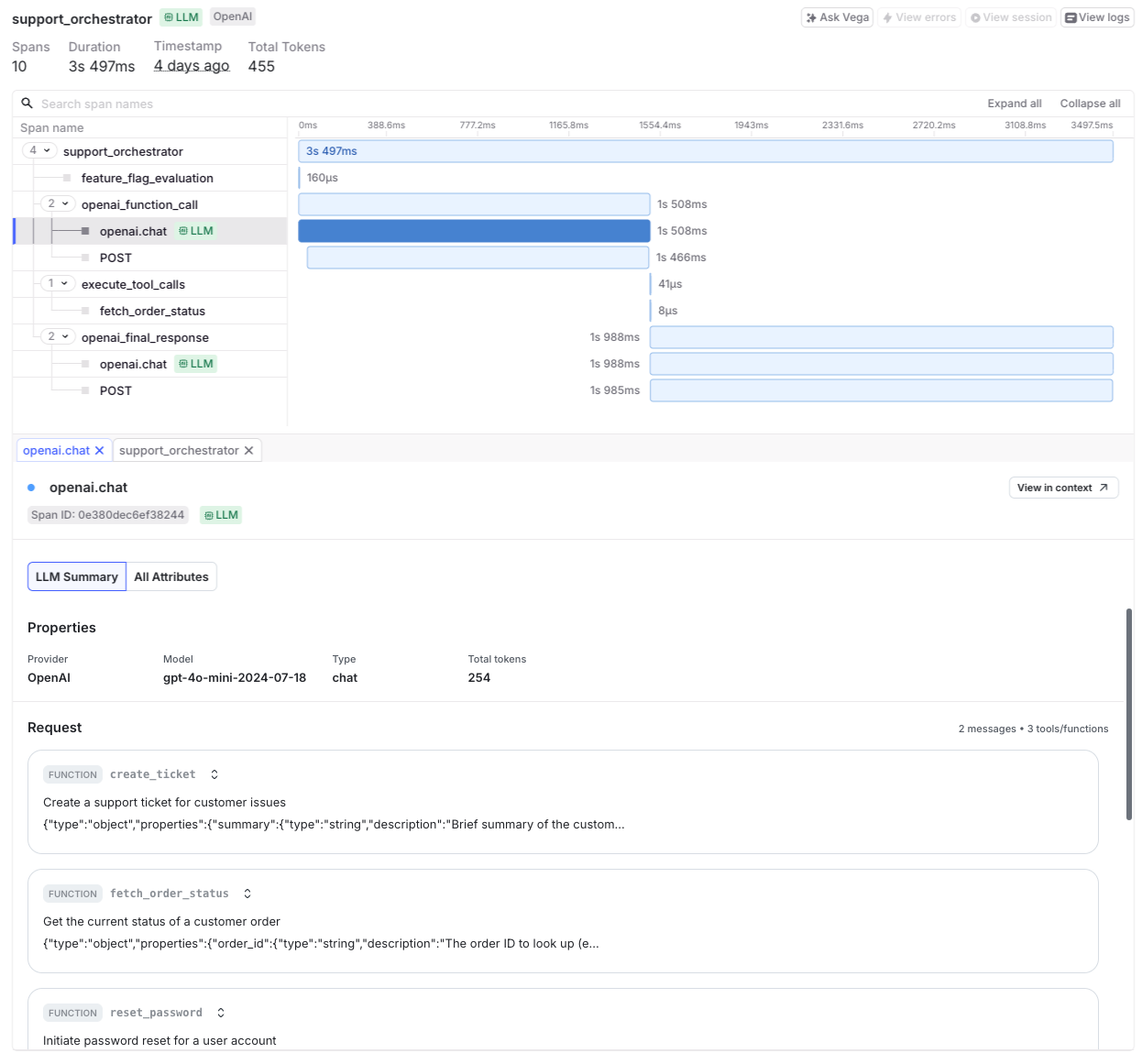

When you navigate to “Monitor” in LaunchDarkly and click Traces, the traces page displays a list of spans based on the filters you have enabled. If a span was generated by an LLM, it is marked with a green LLM symbol. Selecting a span opens a detailed view with the same interface as other observability tools. The Summary tab highlights model-specific telemetry such as prompts, responses, token counts, and provider details. The All attributes tab displays the raw span attributes received by LaunchDarkly.

Prerequisites

Before you set up LLM observability, make sure you have the following prerequisites:

- Access to LaunchDarkly observability.

- An application that uses an LLM provider supported by OpenLLMetry.

- A LaunchDarkly SDK key for your environment. The LaunchDarkly observability SDK is required for LaunchDarkly to receive and display spans.

- Policies for handling sensitive data, because prompts and responses may include personally identifiable information (PII).

Initialize the LaunchDarkly SDK first

You must initialize the LaunchDarkly SDK before you register any OpenLLMetry instrumentations. The initialization order determines how LaunchDarkly captures and exports spans. If you register OpenLLMetry before the LaunchDarkly SDK starts, LaunchDarkly may not receive or display LLM spans.

Install and configure both the LaunchDarkly observability SDK and OpenLLMetry. The observability SDK sends spans to LaunchDarkly, and OpenLLMetry captures LLM activity from your provider.

Set up LLM observability

The order in which you initialize your SDKs and libraries is important for LLM observability. Follow this sequence whenever you configure instrumentation.

- Initialize the LaunchDarkly SDK. Do this before you register any OpenLLMetry instrumentation, or LaunchDarkly may not capture LLM spans.

- Initialize OpenLLMetry for your provider. OpenLLMetry must load before the provider library so that it can instrument it.

- Initialize the LLM provider. After OpenLLMetry is initialized, it can patch the provider to capture observability data.

Set up LLM observability to connect your application to LaunchDarkly and begin collecting LLM span data. LLM observability requires two components:

- LaunchDarkly observability SDK: enables telemetry export from your service to LaunchDarkly.

- OpenLLMetry provider instrumentation: automatically captures spans and metadata from your LLM provider.

Follow these steps to configure LLM observability in your application. The examples that follow show JavaScript and Python, but you can complete the same steps in any supported language.

- Install the required LaunchDarkly SDK and OpenLLMetry packages for your language. OpenLLMetry instruments your LLM provider automatically, so you do not need to install or configure OpenTelemetry yourself unless your application needs it.

- Import or include the LaunchDarkly SDK and OpenLLMetry in your application.

- Initialize the LaunchDarkly SDK before registering any OpenLLMetry instrumentations, and include the observability plugin to export telemetry to LaunchDarkly. Initializing the LaunchDarkly AI SDK is optional. You need it only if you use AI Configs or other AI-related APIs.

- Initialize OpenLLMetry before importing any LLM modules so it can automatically detect and instrument the provider SDK. Register provider-specific instrumentations such as OpenAI or Anthropic as needed by your application.

- Run your application and verify that LaunchDarkly displays incoming LLM spans on the traces page.

After you complete these steps, LaunchDarkly observability begins displaying LLM spans, marked with a green LLM symbol, on the traces page.

Example: JavaScript

This example shows how to instrument a JavaScript application using OpenLLMetry-JS provider instrumentations.

Install the instrumentation for your provider, such as OpenAI:

Example: Python

This example shows how to instrument a Python application using provider-specific OpenLLMetry packages. Installation and usage depend on the provider used by your application. Installation instructions for each provider are available in the OpenLLMetry packages directory.

Install the instrumentation for your provider, such as Anthropic:

Supported OpenLLMetry packages and languages

LaunchDarkly supports OpenLLMetry instrumentation packages for generative AI providers, such as those listed in the OpenLLMetry packages directory. LaunchDarkly observability processes and displays only spans emitted by these OpenLLMetry packages.

OpenLLMetry supports JavaScript and Python. LaunchDarkly follows the OpenLLMetry semantic attribute structure, so spans from other open-source LLM instrumentation SDKs may not be captured, processed, or displayed accurately.

View and analyze LLM spans

After LaunchDarkly begins ingesting spans, navigate to “Monitor” and click Traces to explore them. The traces page lists spans based on the filters you have enabled. Selecting an LLM span opens a detailed view with model-specific telemetry.

Use the following controls to analyze spans:

- Search bar: query spans by name, model ID, duration, or provider.

- Filters: focus on spans by attributes such as service name, version, environment, span kind, or metric type.

- Time range selector: select a range to view live, recent, or historical spans.

The trace detail panel includes:

- A hierarchical timeline showing generation steps, tool invocations, and model calls.

- Metadata such as provider, model name, latency, and token usage.

- Events representing prompt and response pairs.

- Provider error or exception events, if any.
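The hierarchy in the timeline comes from parent-child relationships between spans. In OpenTelemetry, each span records the ID of its parent span within the same trace, and the root span has no parent. The following Python sketch rebuilds that kind of hierarchy from a flat list of spans. The span names and the `span_id`, `parent_id`, and `start_ms` fields are illustrative assumptions, not the exact format LaunchDarkly stores or displays.

```python
from collections import defaultdict

# Hypothetical flattened spans from one trace; parent_id is None for the root.
spans = [
    {"span_id": "a", "parent_id": None, "name": "agent.run", "start_ms": 0},
    {"span_id": "b", "parent_id": "a", "name": "openai.chat", "start_ms": 5},
    {"span_id": "c", "parent_id": "a", "name": "tool.search", "start_ms": 900},
    {"span_id": "d", "parent_id": "a", "name": "openai.chat", "start_ms": 1300},
]


def render_tree(spans: list[dict]) -> list[str]:
    """Return one indented line per span, with siblings ordered by start time."""
    children: dict = defaultdict(list)
    for span in spans:
        children[span["parent_id"]].append(span)
    for siblings in children.values():
        siblings.sort(key=lambda s: s["start_ms"])

    lines: list[str] = []

    def walk(parent_id, depth: int) -> None:
        # Depth-first walk: print a span, then everything nested under it.
        for span in children.get(parent_id, []):
            lines.append("  " * depth + span["name"])
            walk(span["span_id"], depth + 1)

    walk(None, 0)
    return lines


for line in render_tree(spans):
    print(line)
```

Here, a generation step (`agent.run`) contains two model calls and a tool invocation. This matches the kind of nesting the timeline shows.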

LLM spans include standardized attributes emitted by OpenLLMetry, such as:

- gen_ai.model: the name of the model that handled the request.

- gen_ai.prompt_tokens: the number of input tokens processed.

- gen_ai.completion_tokens: the number of tokens in the output.

- gen_ai.input: the input prompt text.

- gen_ai.output: the generated response.

- duration: the total latency of the span.

- service.name: the name of the emitting service.
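The following Python sketch shows how these attributes combine into the kind of values highlighted in the Summary tab. It reads them from a raw attribute dictionary and computes a total token count. The `LLMSpanSummary` class and `summarize` function are hypothetical helpers, and the attribute names are taken from the list above. To confirm the exact keys your instrumentation emits, check the All attributes tab.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LLMSpanSummary:
    """Hypothetical summary of one LLM span (not a LaunchDarkly type)."""

    model: Optional[str]
    prompt_tokens: int
    completion_tokens: int
    service: Optional[str]

    @property
    def total_tokens(self) -> int:
        # Input plus output tokens for this single request.
        return self.prompt_tokens + self.completion_tokens


def summarize(attributes: dict) -> LLMSpanSummary:
    """Read the attributes listed above from a raw span attribute dict.

    Missing keys become None or 0, so in this sketch a span that uses
    different attribute names produces an empty summary.
    """
    return LLMSpanSummary(
        model=attributes.get("gen_ai.model"),
        prompt_tokens=int(attributes.get("gen_ai.prompt_tokens", 0)),
        completion_tokens=int(attributes.get("gen_ai.completion_tokens", 0)),
        service=attributes.get("service.name"),
    )


# Illustrative attribute values, not real telemetry.
example = summarize({
    "gen_ai.model": "example-model",
    "gen_ai.prompt_tokens": 120,
    "gen_ai.completion_tokens": 45,
    "service.name": "support-bot",
})
print(example.model, example.total_tokens)  # example-model 165
```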

Use the search bar to query spans by these attributes. For example:

Use cases for LLM observability

LLM observability provides insight into the behavior and efficiency of your AI systems. Use it to:

- Optimize latency and cost: monitor model performance and token usage to improve efficiency.

- Identify runtime errors: correlate failed generations with error logs or provider exceptions.

- Validate model outputs: review prompt and response pairs for accuracy and consistency.

- Correlate with user activity: connect LLM spans with session or error data to understand downstream effects.
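For the latency and cost use case, a useful first view is often a per-model rollup of span data. The following Python sketch groups hypothetical span records by model. It reports call count, mean latency, total tokens, and an estimated cost. The model names and prices are placeholders, and the sketch assumes durations are in milliseconds. It also uses a single blended price per model, although many providers price input and output tokens differently.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical span records; duration_ms is assumed to be in milliseconds.
spans = [
    {"model": "model-a", "duration_ms": 800, "prompt_tokens": 100, "completion_tokens": 50},
    {"model": "model-a", "duration_ms": 1200, "prompt_tokens": 300, "completion_tokens": 150},
    {"model": "model-b", "duration_ms": 400, "prompt_tokens": 100, "completion_tokens": 20},
]

# Placeholder prices in USD per 1,000 tokens; substitute your provider's pricing.
PRICE_PER_1K_TOKENS = {"model-a": 0.01, "model-b": 0.002}


def per_model_report(spans: list[dict]) -> dict:
    """Group spans by model and summarize latency, tokens, and estimated cost."""
    grouped = defaultdict(list)
    for span in spans:
        grouped[span["model"]].append(span)

    report = {}
    for model, items in grouped.items():
        tokens = sum(s["prompt_tokens"] + s["completion_tokens"] for s in items)
        report[model] = {
            "calls": len(items),
            "mean_latency_ms": mean(s["duration_ms"] for s in items),
            "total_tokens": tokens,
            "est_cost_usd": tokens / 1000 * PRICE_PER_1K_TOKENS[model],
        }
    return report


for model, row in per_model_report(spans).items():
    print(model, row)
```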

Privacy and data handling

LLM observability may include input and output text as span attributes. This data can contain PII depending on your application. Review your organization’s data-handling policies before enabling LLM observability, and redact sensitive data at the source if required.
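Redacting at the source means removing sensitive values in your application before the text reaches the instrumented provider call. The following Python sketch replaces email addresses and phone-number-like strings with placeholders. The `redact` function and its regular expressions are illustrative assumptions, not a complete PII detector. For production data, use a vetted approach that meets your organization's policies.

```python
import re

# Illustrative patterns only; they miss many PII formats and can over-match.
_REDACTIONS = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "[EMAIL]"),
    (re.compile(r"\+?\d[\d\s().-]{7,}\d"), "[PHONE]"),
]


def redact(text: str) -> str:
    """Replace email addresses and phone-number-like runs with placeholders."""
    for pattern, placeholder in _REDACTIONS:
        text = pattern.sub(placeholder, text)
    return text


prompt = "Contact jane.doe@example.com or +1 (555) 123-4567 about order 42."
print(redact(prompt))
# Contact [EMAIL] or [PHONE] about order 42.
```

The instrumentation records what your application sends to the provider. Redacting before the call therefore keeps these values out of the span, but it also hides them from the model. Decide which trade-off fits your use case.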